Hierarchical Control

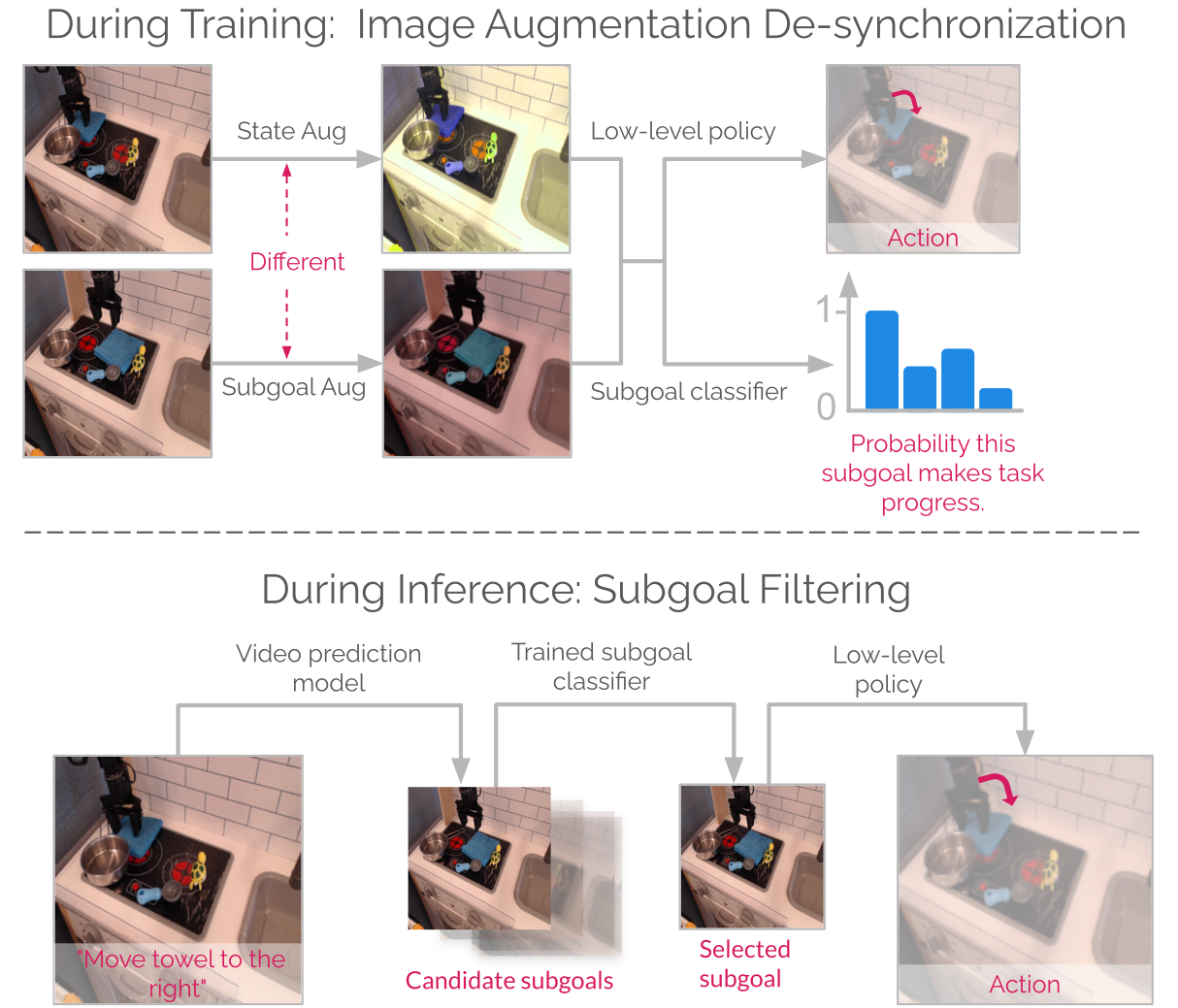

GHIL-Glue can be applied to existing hierarchical imitation learning methods that use video prediction models. It filters out generated subgoal images that do not make task progress, and it trains low-level goal-reaching policies to be robust to hallucinated artifacts in generated subgoal images.
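The subgoal filtering step can be illustrated with a minimal sketch. It assumes that several candidate subgoals are sampled from the video prediction model and that some scoring function estimates how much task progress each candidate makes. The names `select_subgoal` and `progress_score`, the highest-score selection rule, and the toy brightness-based score are all hypothetical stand-ins chosen for illustration, not the method's actual components.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

Image = TypeVar("Image")


def select_subgoal(
    candidates: Sequence[Image],
    progress_score: Callable[[Image], float],
) -> Tuple[Image, List[float]]:
    """Keep the candidate subgoal with the highest progress score.

    `progress_score` is a hypothetical callable standing in for whatever
    model judges task progress; it is an assumption of this sketch.
    """
    if not candidates:
        raise ValueError("need at least one candidate subgoal")
    # Score every generated candidate.
    scores = [float(progress_score(c)) for c in candidates]
    # Pick the index of the best-scoring candidate; the rest are discarded.
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return candidates[best_index], scores


# Toy usage: "images" are short lists of pixel values, and the score is
# mean brightness, used only as a placeholder for a progress estimate.
toy_candidates = [[0.1, 0.2], [0.8, 0.9], [0.4, 0.5]]
toy_score = lambda img: sum(img) / len(img)
chosen, scores = select_subgoal(toy_candidates, toy_score)
```

In a hierarchical policy, the chosen subgoal would then be passed to the low-level goal-reaching policy, while the discarded candidates are never executed.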

Improving subgoal quality

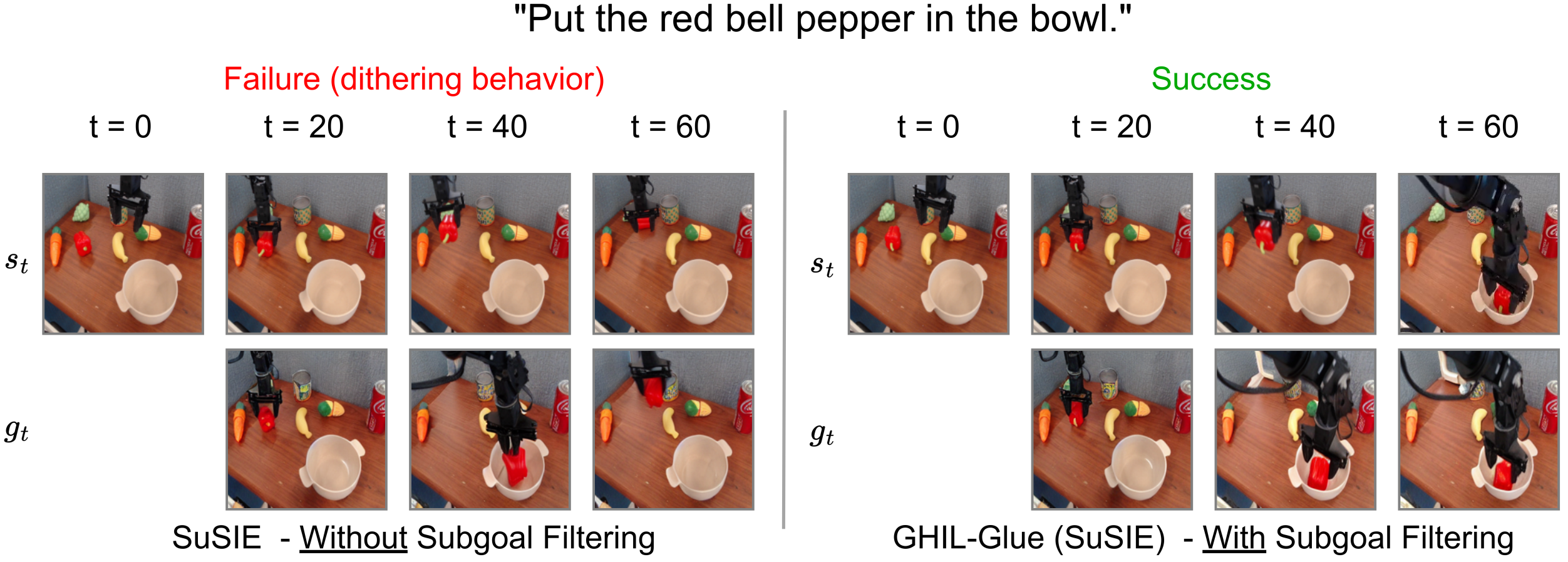

GHIL-Glue reduces dithering behavior in hierarchical policy methods and makes policies robust to hallucinated artifacts.

Zero-shot generalization

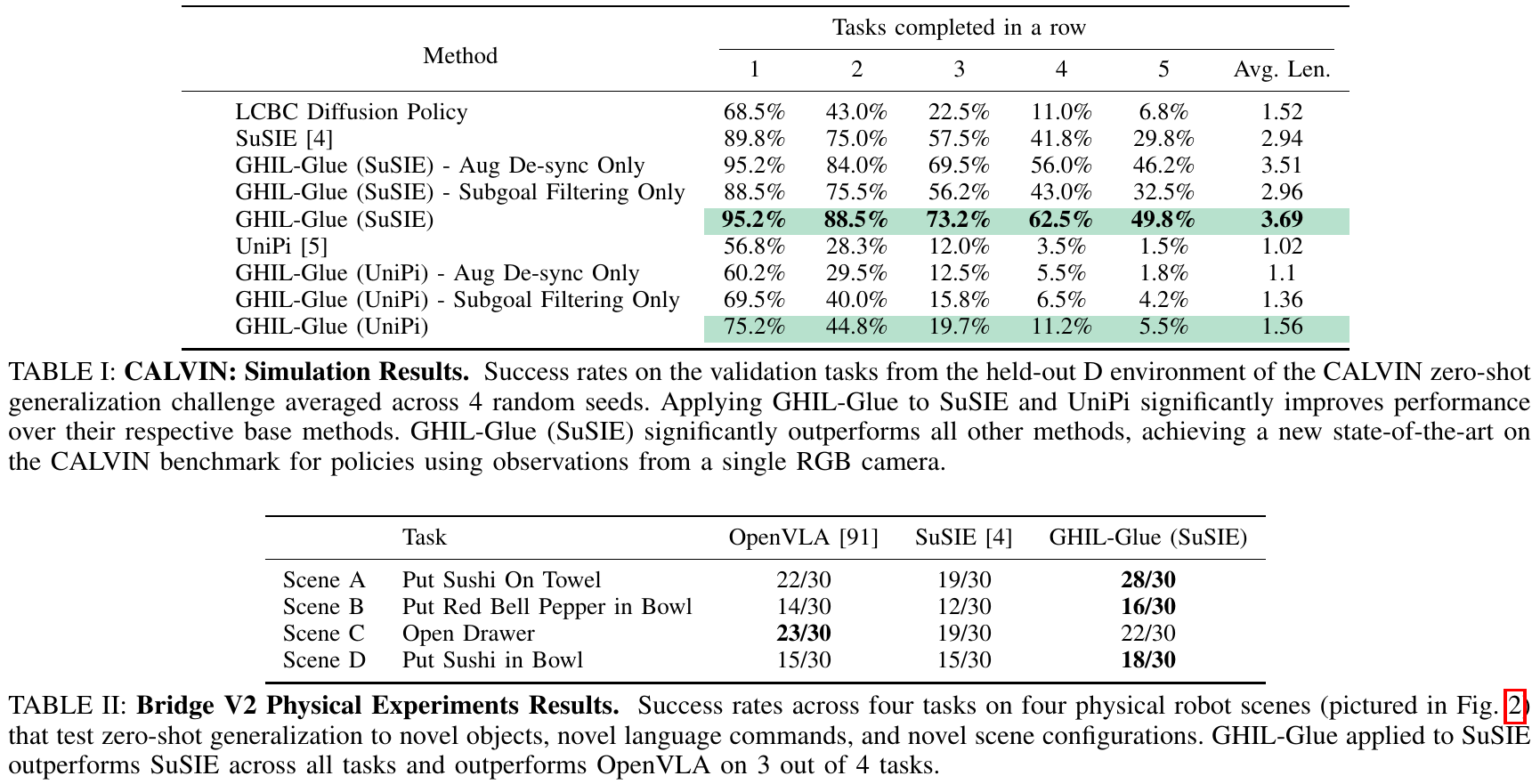

Applying GHIL-Glue to existing hierarchical policy methods achieves state-of-the-art zero-shot generalization performance on both real and simulated robotics benchmarks.

Quantitative Results

GHIL-Glue achieves state-of-the-art performance on the CALVIN benchmark as well as on five physical environments based on the Bridge V2 robot platform.

Appendix

For additional ablations and implementation details, please see the Appendix.